Deep Learning - CNN - Convolutional Neural Network - Padding & Strides Tutorial

Padding adds layers of zeros around the border of an input image. Its purpose is to preserve the original size of the image when a convolutional filter is applied, and to let the filter perform full convolutions on the edge pixels.

When we apply a filter/kernel to the original image, the resulting image (the feature map) is smaller than the input, so some information is lost.

Also, pixels in the middle of the image are covered by more filter positions than pixels near the border, so the middle portion gets higher priority than the sides. This again loses information.

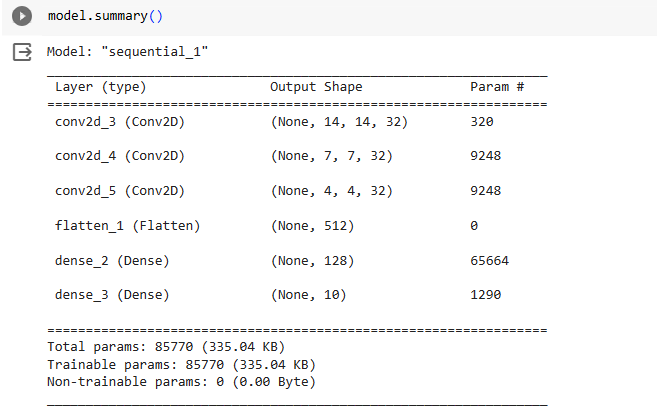

For example, if the original image size is 5 x 5 and the filter size is 3 x 3, then the feature map size will be (5-3+1) x (5-3+1) = 3 x 3. We lose the original image size and also the crucial information at the edges.

To prevent this, we pad the image with one layer of zeros on each side (p = 1). This adds 2 pixels to each dimension, which is exactly the difference between the original image (5) and the feature map (3). It is also known as zero padding because we are padding with 0.

The new image size is therefore 7 x 7, and after applying the 3 x 3 filter the feature map size is (7-3+1) x (7-3+1), i.e. 5 x 5. This preserves the original image size and also the crucial edge information.

The formula is n + 2p - f + 1 = 5 + 2(1) - 3 + 1 = 5

where n is the size of the original image, p is the padding added on each side, and f is the size of the filter.
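The 5 x 5 example can be checked with a small pure-Python sketch. The helper names `zero_pad` and `convolve_valid` and the all-ones image and filter are illustrative assumptions, not part of any library. Like most CNN frameworks, the sketch slides the kernel without flipping it.

```python
def zero_pad(image, p):
    """Return a copy of a 2-D list with p rows/columns of zeros on every side."""
    width = len(image[0]) + 2 * p
    padded = [[0] * width for _ in range(p)]          # top border
    for row in image:
        padded.append([0] * p + list(row) + [0] * p)  # left/right border
    padded.extend([0] * width for _ in range(p))      # bottom border
    return padded


def convolve_valid(image, kernel):
    """Slide a square kernel over the image with stride 1 and no padding."""
    f = len(kernel)
    out_h = len(image) - f + 1
    out_w = len(image[0]) - f + 1
    return [
        [
            sum(image[i + a][j + b] * kernel[a][b]
                for a in range(f) for b in range(f))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


image = [[1] * 5 for _ in range(5)]    # 5 x 5 image of ones (made-up data)
kernel = [[1] * 3 for _ in range(3)]   # 3 x 3 summing filter (made-up data)

no_pad = convolve_valid(image, kernel)
with_pad = convolve_valid(zero_pad(image, 1), kernel)

print(len(no_pad), len(no_pad[0]))      # 3 3
print(len(with_pad), len(with_pad[0]))  # 5 5
print(with_pad[0])                      # [4, 6, 6, 6, 4]
```

The first output row of the padded result shows smaller sums at the corners and edges, because part of each window there falls on the zero border.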

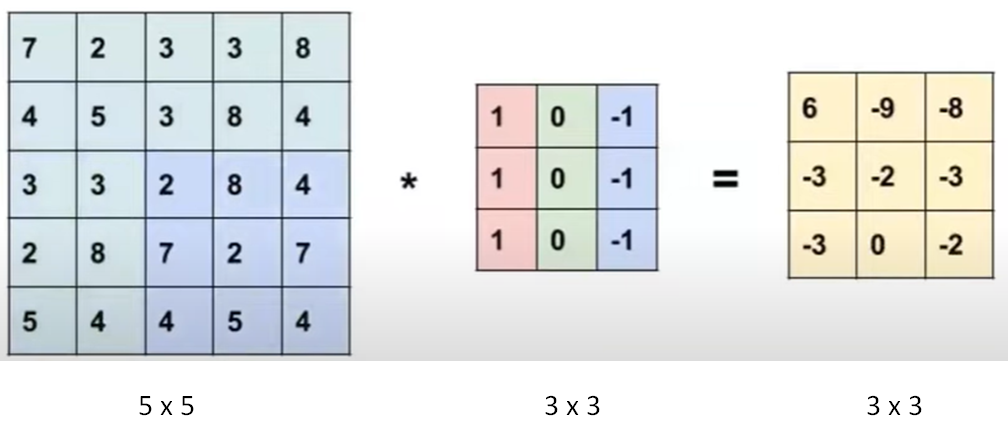

In Keras, there are two padding options: "valid" (no padding) and "same" (with a stride of 1, the output has the same size as the original image).
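For a stride of 1, the amount of padding that a same-size output requires follows from the formula above. A short derivation, assuming an odd filter size f so that the padding can be split evenly between both sides:

\[
n + 2p - f + 1 = n \quad\Longrightarrow\quad p = \frac{f - 1}{2}
\]

With f = 3 this gives p = 1, which matches the 5 x 5 example.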

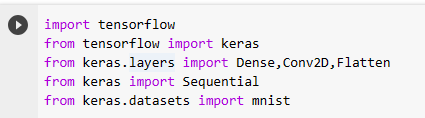

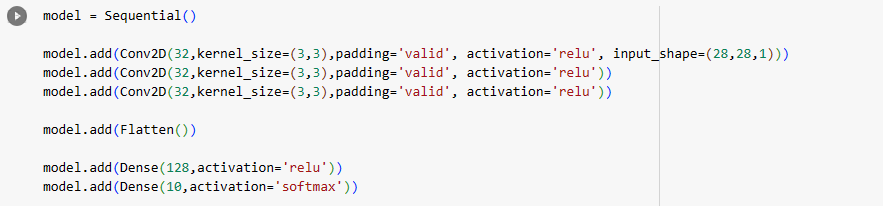

For padding = valid

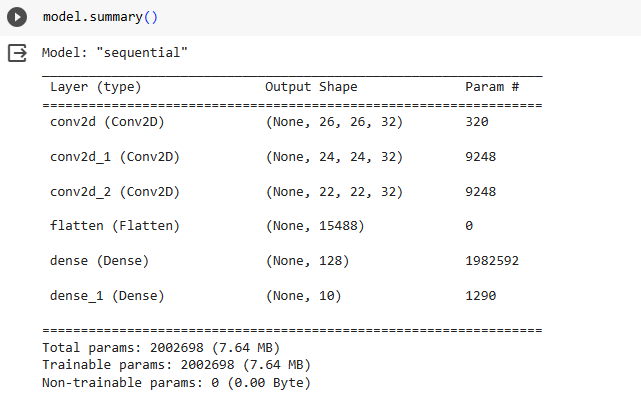

For padding = same

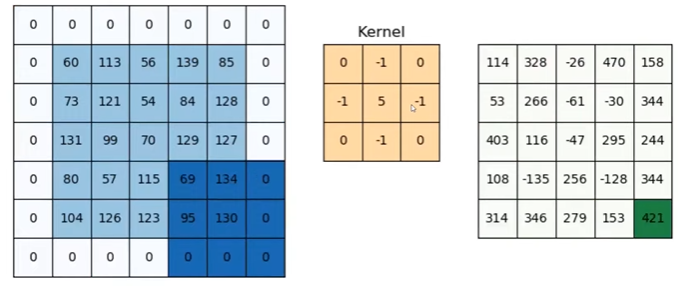

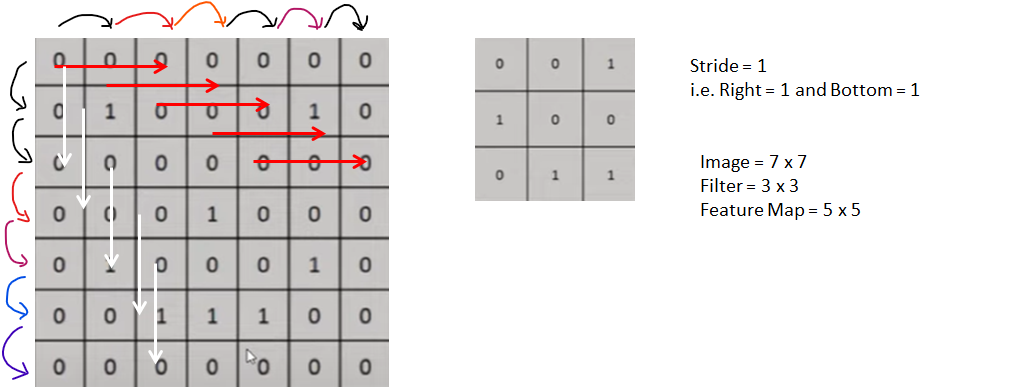

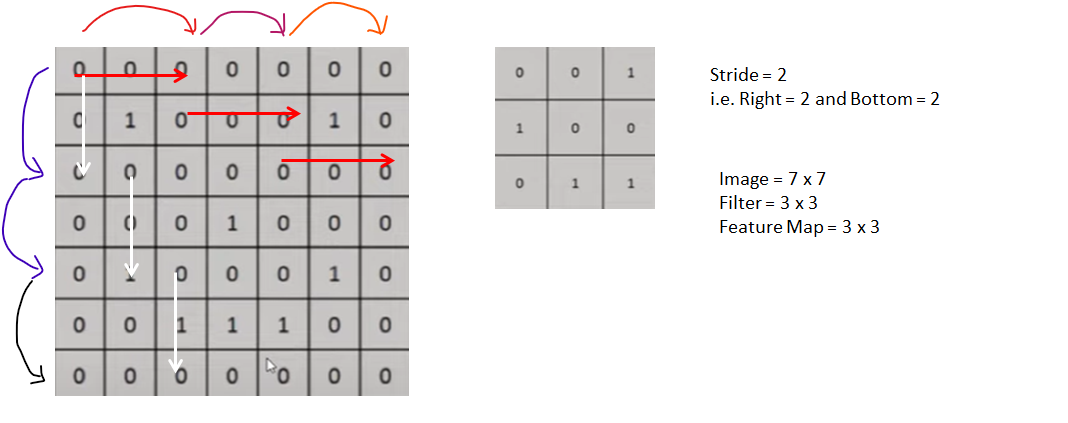

Stride in the context of convolutional neural networks describes the step size of the kernel when you slide a filter over an input image. With a stride of 2, you slide the filter by two pixels at each step.

As the stride increases, the size of the resulting image (feature map) decreases.

Formula:

\(n - f + 1\) (stride 1, no padding)

→ \(\left\lfloor \frac{n - f}{s} \right\rfloor + 1\) (with stride)

→ \(\left\lfloor \frac{n + 2p - f}{s} \right\rfloor + 1\) (with stride and padding)

If the input image size is 7 x 7, padding = 1, filter size = 3 x 3 and stride = 2,

then the resulting image size will be (7+2-3)/2 + 1 = 4, i.e. 4 x 4.

The feature map (4 x 4) is smaller than the input (7 x 7), so some spatial information is lost.

If the stride is more than 1, then it is strided convolution.
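The output-size formula with padding and stride can be written as a small helper. This is a minimal sketch: the function name `conv_output_size` is an assumption for illustration, and integer division stands in for the floor operation.

```python
def conv_output_size(n, f, p=0, s=1):
    """Output length along one dimension: floor((n + 2p - f) / s) + 1."""
    if n + 2 * p < f:
        raise ValueError("filter is larger than the padded input")
    return (n + 2 * p - f) // s + 1   # // rounds down, i.e. floor


print(conv_output_size(5, 3))            # 3  (no padding, stride 1)
print(conv_output_size(5, 3, p=1))       # 5  (padding 1, stride 1)
print(conv_output_size(7, 3, p=1, s=2))  # 4  (padding 1, stride 2)
```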

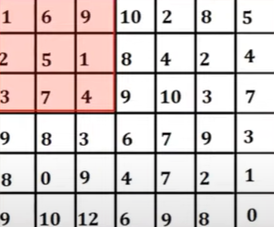

Special case: a 7 x 6 image with a 3 x 3 filter and stride = 2.

When there are too few pixels left for another full step of the filter, the result is rounded down (floor operation).

For 7 → \(\left\lfloor \frac{n - f}{s} \right\rfloor + 1\) = \(\left\lfloor \frac{7 - 3}{2} \right\rfloor + 1\) = 2 + 1 = 3

For 6 → \(\left\lfloor \frac{n - f}{s} \right\rfloor + 1\) = \(\left\lfloor \frac{6 - 3}{2} \right\rfloor + 1\) = 1 + 1 = 2 (1.5 is floored to 1)

Therefore, the new image will be 3 x 2.
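One way to see where the floor comes from is to list the positions at which the filter can start along each side. The sketch below assumes a 3 x 3 filter; the helper name `window_starts` is hypothetical.

```python
def window_starts(n, f, s):
    """Start indices at which a filter of size f fits on an axis of length n."""
    return list(range(0, n - f + 1, s))


side_7 = window_starts(7, 3, 2)
side_6 = window_starts(6, 3, 2)

print(side_7)                    # [0, 2, 4]
print(side_6)                    # [0, 2]
print(len(side_7), len(side_6))  # 3 2
```

On the side of length 6, a window starting at index 4 would need pixels 4 to 6, but the last valid index is 5, so that step is dropped.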

Why is strided convolution required?

1] When you only want high-level features from an image

2] To reduce computation, since the filter is applied at fewer positions
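A rough count of multiplications gives a feel for how the stride affects the cost. This sketch assumes a single-channel square image and a single filter, and the function name `multiply_count` is made up for illustration.

```python
def multiply_count(n, f, p, s):
    """Multiplications for one f x f filter over an n x n single-channel image."""
    out = (n + 2 * p - f) // s + 1   # output size along one dimension
    return out * out * f * f         # f*f multiplications per output pixel


print(multiply_count(7, 3, 1, 1))  # 441  (7 x 7 output)
print(multiply_count(7, 3, 1, 2))  # 144  (4 x 4 output)
```

For the 7 x 7 example, going from stride 1 to stride 2 cuts the count to roughly a third.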

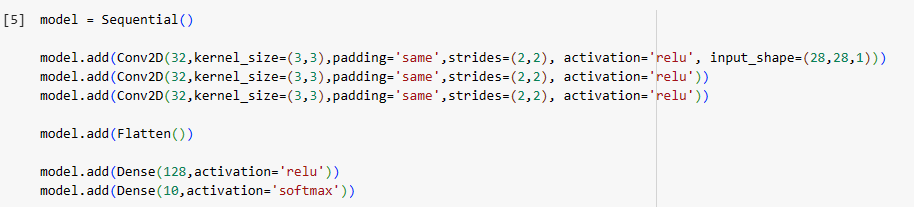

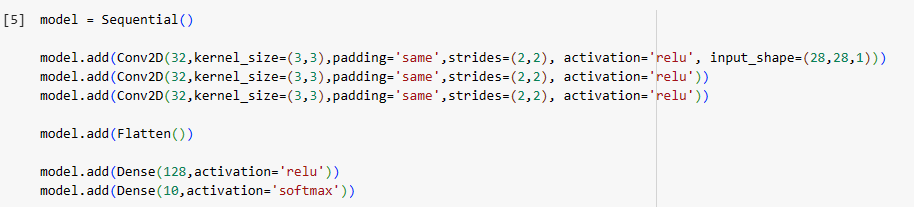

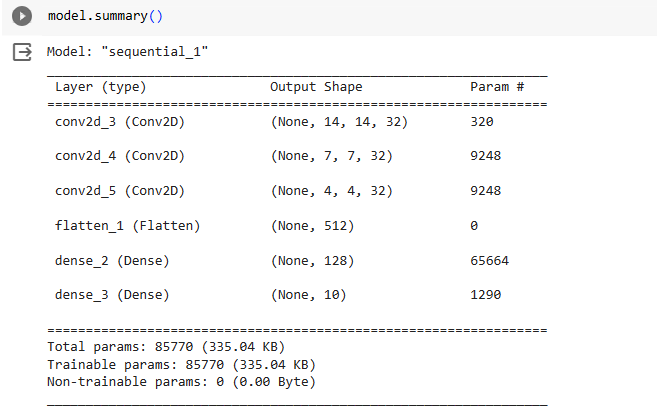

In Keras-