Deep Learning - CNN (Convolutional Neural Network) - Pre-trained Models & Transfer Learning Tutorial

What is a pre-trained Model?

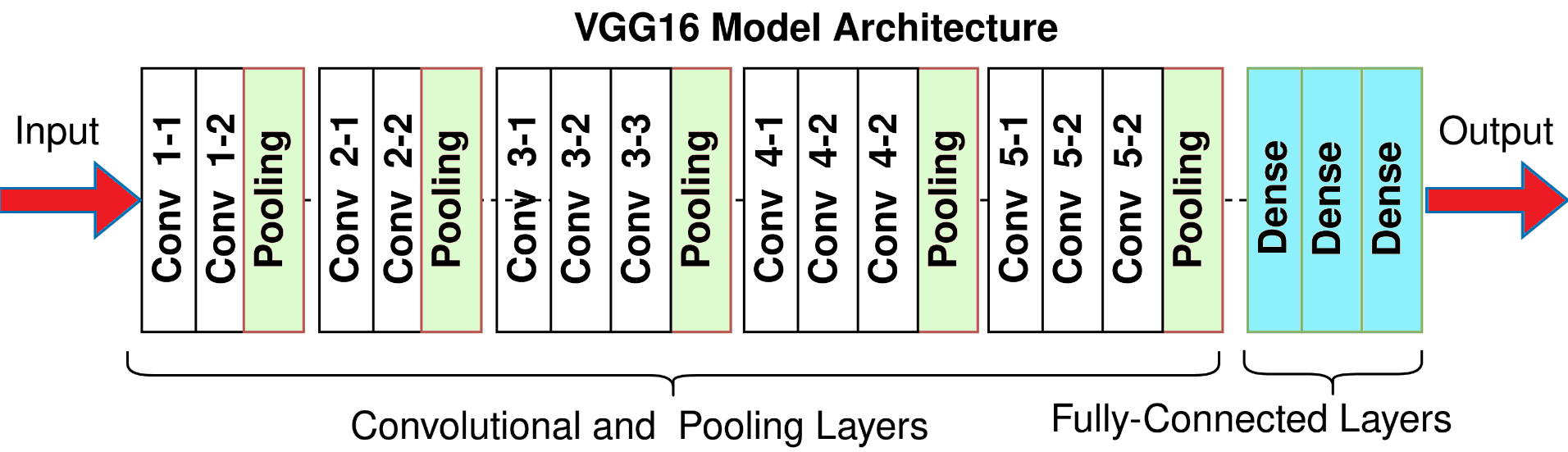

A pre-trained model is a model that someone else has already created and trained to solve a problem similar to ours. In practice, that someone is usually a large tech company or a group of leading researchers. They typically train on a very large base dataset, such as ImageNet or the Wikipedia corpus, using a large neural network (e.g., VGG19, which has 143,667,240 parameters) to solve a particular problem (for VGG19, image classification). The trained model must then be made public so that others can take it and repurpose it.
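A figure like VGG19's 143,667,240 parameters can be worked out layer by layer. A convolution layer with k×k kernels, c_in input channels and c_out filters has (k·k·c_in + 1)·c_out parameters. A dense layer has (n_in + 1)·n_out. In both cases the +1 is the bias. The sketch below assumes the standard VGG19 layout: sixteen 3×3 convolution layers in five blocks, a 224×224×3 input, and three dense layers ending in 1,000 classes. The helper names are illustrative.

```python
# Parameter count for VGG19, computed layer by layer.
# Assumes the standard configuration: 224x224x3 input, 3x3 convolutions, 1000 classes.

def conv_params(k, c_in, c_out):
    # each of the c_out filters has k*k*c_in weights plus one bias
    return (k * k * c_in + 1) * c_out


def dense_params(n_in, n_out):
    # n_in weights plus one bias per output unit
    return (n_in + 1) * n_out


# (number of conv layers, output channels) for each of VGG19's five blocks
VGG19_BLOCKS = [(2, 64), (2, 128), (4, 256), (4, 512), (4, 512)]


def vgg19_param_count(num_classes=1000):
    conv_total, c_in = 0, 3  # RGB input
    for n_layers, c_out in VGG19_BLOCKS:
        for _ in range(n_layers):
            conv_total += conv_params(3, c_in, c_out)
            c_in = c_out
    # five 2x2 max-pools shrink 224x224 to 7x7, so the first dense layer sees 7*7*512 inputs
    flat = 7 * 7 * 512
    dense_total = (
        dense_params(flat, 4096)
        + dense_params(4096, 4096)
        + dense_params(4096, num_classes)
    )
    return conv_total, dense_total


conv, dense = vgg19_param_count()
print(f"conv: {conv:,}  dense: {dense:,}  total: {conv + dense:,}")
# conv: 20,024,384  dense: 123,642,856  total: 143,667,240
```

Roughly 86% of the parameters are in the three fully connected layers, which are the layers that transfer learning replaces.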

Why use a pre-trained Model?

1] Deep learning models are data-hungry

For example, suppose organization XYZ has 1 crore (10 million) unlabelled images and needs them labelled before it can train a model. It has to hire employees to label the images manually and pay their salaries, which is a large cost.

Now suppose another organization, ABC, needs a model for a similar task. ABC does not need to hire its own labelling team. It can obtain the model that XYZ has already trained (or XYZ's labelled images) at a low cost. ABC saves money, and XYZ recovers part of what it spent on labelling.

2] Training a model takes a lot of time

Training a large model on a large dataset can take more than a week.

In the previous example, organization ABC saves both cost and this training time by using the pre-trained model.

ImageNet Dataset

ImageNet is a visual database of labelled images created by Fei-Fei Li, who started the project in 2006.

The full ImageNet dataset contains about 1.4 crore (14 million) labelled images in more than 20,000 categories.

About 10 lakh (1 million) of these images also have bounding boxes (used for object localization).

How was this big dataset created?

Through crowdsourcing, i.e., asking many individual people which label fits each image and combining their answers. Crowdsourcing can take any form, such as surveys or multiple-choice questions (MCQs).
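A simple majority vote shows one way to combine many people's answers into a single label. This is a simplified illustration, not ImageNet's actual procedure, which was more involved and ran on Amazon Mechanical Turk. `aggregate_labels` and the `min_agreement` threshold are hypothetical.

```python
from collections import Counter


def aggregate_labels(votes, min_agreement=0.6):
    """Combine crowd answers into one label per image by majority vote.

    votes: dict mapping image id -> list of labels given by different people.
    Returns a dict mapping image id -> winning label, or None when the top
    label's share of the votes is below min_agreement (the image needs more votes).
    """
    labels = {}
    for image_id, answers in votes.items():
        if not answers:
            labels[image_id] = None
            continue
        label, count = Counter(answers).most_common(1)[0]
        labels[image_id] = label if count / len(answers) >= min_agreement else None
    return labels


votes = {
    "img_001": ["cat", "cat", "dog", "cat"],  # 3 of 4 agree -> accepted
    "img_002": ["car", "truck", "bus"],       # no agreement -> needs more votes
}
print(aggregate_labels(votes))
# {'img_001': 'cat', 'img_002': None}
```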

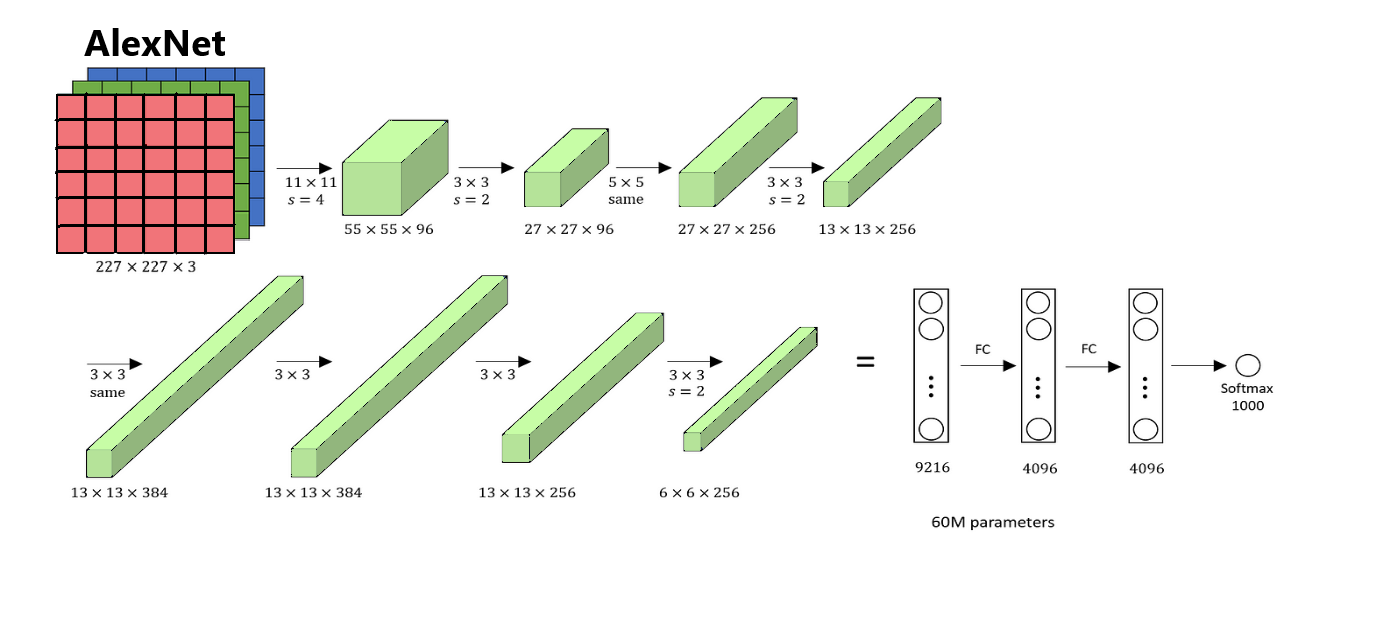

AlexNet (2012) combined a deep CNN, GPU training and ReLU activations.

Famous Architectures

| Year | Architecture | ILSVRC Top-5 Error Rate |
|------|--------------|-------------------------|
| 2010 | Classical ML model | 28.2% |
| 2011 | Classical ML model | 25.8% |
| 2012 | AlexNet | 16.4% |
| 2013 | ZFNet | 11.7% |
| 2014 | VGG | 7.3% |
| 2014 | GoogLeNet | 6.7% |
| 2015 | ResNet | 3.57% |

Keras ships many of these pre-trained models (e.g., VGG16, VGG19, ResNet50 and Xception, with ImageNet weights) as Keras Applications.
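The following minimal sketch uses one of these models directly. It assumes TensorFlow 2.x with its bundled `tf.keras`. `VGG16`, `vgg16.preprocess_input` and `vgg16.decode_predictions` are Keras Applications functions. The wrapper names (`load_pretrained_vgg16`, `preprocess`, `top5_predictions`) are illustrative. Loading with `weights="imagenet"` downloads the weight file (several hundred MB) on first use.

```python
import numpy as np
import tensorflow as tf


def load_pretrained_vgg16():
    # Architecture plus ImageNet weights; the weights file is downloaded
    # on first use and cached under ~/.keras/models
    return tf.keras.applications.VGG16(weights="imagenet")


def preprocess(images):
    # images: RGB pixel values in [0, 255], shape (N, 224, 224, 3).
    # VGG16's preprocess_input converts RGB to BGR and subtracts the ImageNet
    # per-channel means; np.array(...) copies first so the caller's data is untouched.
    return tf.keras.applications.vgg16.preprocess_input(np.array(images, dtype="float32"))


def top5_predictions(model, images):
    probs = model.predict(preprocess(images), verbose=0)  # shape (N, 1000)
    # Maps each row of probabilities to [(class_id, class_name, score), ...]
    return tf.keras.applications.vgg16.decode_predictions(probs, top=5)
```

The same pattern applies to other Keras Applications models such as `ResNet50` or `Xception`. Each one has its own `preprocess_input` and `decode_predictions` module (`tf.keras.applications.resnet50`, `tf.keras.applications.xception`). Note that Xception expects 299×299 inputs rather than 224×224.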

How to Visualize Filters and Feature Maps in Convolutional Neural Networks

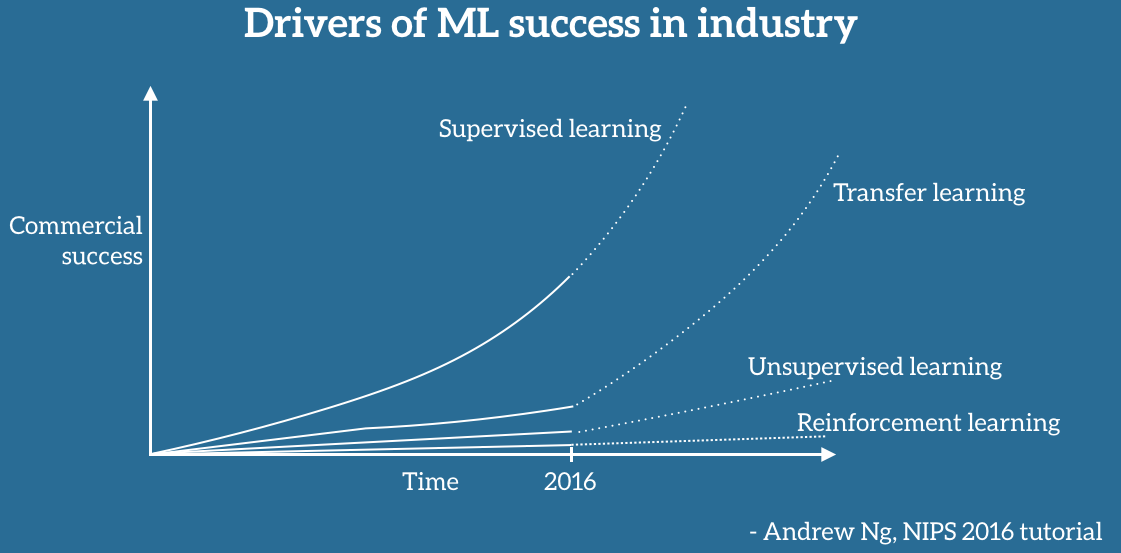
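This minimal Keras sketch assumes a VGG16 convolutional base from Keras Applications. A layer's filters are its kernel weights. Its feature maps are its outputs for a given input image, and you can read them by building a second model that ends at that layer. The function names are illustrative. `block1_conv1` is simply VGG16's first convolution layer.

```python
import tensorflow as tf


def normalized_filters(model, layer_name="block1_conv1"):
    # A Conv2D layer's weights are [kernel, bias]. For VGG16's first layer the
    # kernel has shape (3, 3, 3, 64): height, width, input channels, filters.
    kernel, _bias = model.get_layer(layer_name).get_weights()
    # Rescale all values to [0, 1] so each 3x3x3 filter can be shown as an RGB image
    return (kernel - kernel.min()) / (kernel.max() - kernel.min())


def feature_map_model(model, layer_name="block1_conv1"):
    # New model sharing the same layers but ending at layer_name, so predict()
    # returns that layer's activations (its feature maps) instead of the final output
    return tf.keras.Model(inputs=model.inputs, outputs=model.get_layer(layer_name).output)
```

For a preprocessed 224×224 image, `feature_map_model(vgg).predict(x)` returns an array of shape (1, 224, 224, 64). You can display each of the 64 channels, or each filter from `normalized_filters`, with `matplotlib.pyplot.imshow`. Deeper layers such as `block5_conv3` give smaller, more abstract maps (14×14×512 for a 224×224 input).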

What is transfer learning?

Transfer learning is a research problem in machine learning that focuses on storing knowledge gained while solving one problem and applying it to a different but related problem.

For example, knowledge gained while learning to recognize cars could apply when trying to recognize trucks.

Problems with training your own model from scratch -

1] Data-hungry - needs a large amount of labelled data

2] Lots of time to train models from scratch

Solution -

Use models such as VGGNet, ResNet and Xception that were pre-trained on the ImageNet ILSVRC subset (about 1.4 million images in 1,000 categories).

Disadvantage of a pre-trained model -

A pre-trained model can only predict the categories it was trained on. If the category we want is not among them, we use transfer learning.

Suppose we have the pre-trained model VGG16. We keep its convolutional layers, which extract spatial information from the image, and cut off its original fully connected layers. We then combine the convolutional layers with our own fully connected layers, which perform the classification.

When we train this combined model, only the new fully connected layers are trained; the convolutional layers are frozen.
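The cut-and-freeze procedure above can be sketched in Keras as follows, assuming TensorFlow 2.x. Passing `include_top=False` to `VGG16` drops its original fully connected layers. Setting `trainable = False` freezes the convolutional base. The 256-unit hidden layer, the Adam optimizer, `build_transfer_model` and `count_trainable_params` are illustrative choices, not taken from the tutorial.

```python
import math

import tensorflow as tf


def count_trainable_params(model):
    # Total size of the weights the optimizer will update
    return sum(math.prod(tuple(w.shape)) for w in model.trainable_weights)


def build_transfer_model(num_classes, input_shape=(224, 224, 3), weights="imagenet"):
    # include_top=False keeps only VGG16's convolutional layers
    # (the original fully connected layers are cut off)
    conv_base = tf.keras.applications.VGG16(
        weights=weights, include_top=False, input_shape=input_shape
    )
    conv_base.trainable = False  # freeze: pre-trained filters are not updated

    model = tf.keras.Sequential([
        tf.keras.Input(shape=input_shape),
        conv_base,
        # New fully connected classifier, trained from scratch
        tf.keras.layers.Flatten(),
        tf.keras.layers.Dense(256, activation="relu"),
        tf.keras.layers.Dense(num_classes, activation="softmax"),
    ])
    model.compile(
        optimizer="adam",
        loss="sparse_categorical_crossentropy",
        metrics=["accuracy"],
    )
    return model
```

With 224×224 inputs and two classes, the flattened convolutional output has 7×7×512 = 25,088 values. That means about 6.4 million head parameters are trained while the 14,714,688 convolutional parameters stay frozen.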

There are two ways of doing transfer learning -

1] Feature Extraction

Here we replace the pre-trained model's last dense (fully connected) layers with our own, i.e., we freeze the convolutional layers and train only the new fully connected layers.

For example, many pre-trained models are available for cat vs. dog image classification. Their convolutional layers have already learned features of cats and dogs (ImageNet contains many cat and dog classes), so the only thing left to train is your own replacement fully connected layer.

2] Fine Tuning

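Here you replace the fully connected layers as in feature extraction. You also unfreeze the last convolutional layer(s) and re-train them together with the new layers, usually with a small learning rate.

It is applied when your dataset is very different from the data the model was pre-trained on, so the pre-trained features need to be adapted rather than reused as they are.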
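This minimal Keras sketch of fine-tuning assumes a VGG16 setup. It unfreezes only the layers of the last convolutional block (names starting with `block5`). It compiles the model with a small learning rate so the pre-trained weights change only gradually. The function name, the choice of unfreezing exactly block5, and the 1e-5 learning rate are assumptions for illustration.

```python
import math

import tensorflow as tf


def count_trainable_params(model):
    # Total size of the weights the optimizer will update
    return sum(math.prod(tuple(w.shape)) for w in model.trainable_weights)


def build_fine_tuning_model(num_classes, input_shape=(224, 224, 3), weights="imagenet"):
    conv_base = tf.keras.applications.VGG16(
        weights=weights, include_top=False, input_shape=input_shape
    )
    # Unfreeze only the last convolutional block; blocks 1-4 stay frozen
    for layer in conv_base.layers:
        layer.trainable = layer.name.startswith("block5")

    model = tf.keras.Sequential([
        tf.keras.Input(shape=input_shape),
        conv_base,
        tf.keras.layers.Flatten(),
        tf.keras.layers.Dense(256, activation="relu"),
        tf.keras.layers.Dense(num_classes, activation="softmax"),
    ])
    # Small learning rate so the unfrozen pre-trained weights change only slightly
    model.compile(
        optimizer=tf.keras.optimizers.Adam(learning_rate=1e-5),
        loss="sparse_categorical_crossentropy",
        metrics=["accuracy"],
    )
    return model
```

A common practice is to first train the new head with the whole base frozen. Then you unfreeze block5 and continue training at the low learning rate. This way, large gradients from the randomly initialized head do not damage the pre-trained filters.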

Transfer Learning Feature Extraction (Without Data Augmentation) Practical
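This sketch shows one common way to do feature extraction without data augmentation. It is an assumed approach, since the tutorial does not spell out its practical. The idea is to run the frozen convolutional base over the whole dataset once, store the outputs, and train only a small dense classifier on those stored features. It is fast because the expensive convolutions run once per image. `extract_features` and `build_classifier` are illustrative names.

```python
import numpy as np
import tensorflow as tf


def extract_features(conv_base, images, batch_size=32):
    # Run every image through the frozen convolutional base once.
    # images: RGB pixel values in [0, 255], shape (N, height, width, 3)
    x = tf.keras.applications.vgg16.preprocess_input(np.array(images, dtype="float32"))
    features = conv_base.predict(x, batch_size=batch_size, verbose=0)
    # Flatten each (h, w, 512) feature map into one vector per image
    return features.reshape(len(features), -1)


def build_classifier(n_features, num_classes):
    # Only this small dense network is trained; the conv base is not part of it
    model = tf.keras.Sequential([
        tf.keras.Input(shape=(n_features,)),
        tf.keras.layers.Dense(256, activation="relu"),
        tf.keras.layers.Dropout(0.5),
        tf.keras.layers.Dense(num_classes, activation="softmax"),
    ])
    model.compile(
        optimizer="adam",
        loss="sparse_categorical_crossentropy",
        metrics=["accuracy"],
    )
    return model


# Example usage (train_images and train_labels are hypothetical arrays):
# conv_base = tf.keras.applications.VGG16(
#     weights="imagenet", include_top=False, input_shape=(224, 224, 3))
# train_features = extract_features(conv_base, train_images)  # shape (N, 25088)
# clf = build_classifier(train_features.shape[1], num_classes=2)
# clf.fit(train_features, train_labels, epochs=10, validation_split=0.2)
```

Each image's features are computed only once, so this approach cannot use random data augmentation. Augmentation requires running the base on every batch.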

Transfer Learning Feature Extraction (With Data Augmentation) Practical
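This minimal Keras sketch of feature extraction with data augmentation assumes TensorFlow 2.x. Random flip, rotation and zoom layers sit in front of the frozen VGG16 base, so every epoch sees slightly different versions of each training image. The choice of layers and the 0.1 factors are assumptions. Keras applies these layers only during training; at inference they pass images through unchanged. The model expects images already processed by `vgg16.preprocess_input`. `build_augmented_model` and `count_trainable_params` are illustrative names.

```python
import math

import tensorflow as tf


def count_trainable_params(model):
    # Total size of the weights the optimizer will update
    return sum(math.prod(tuple(w.shape)) for w in model.trainable_weights)


def build_augmented_model(num_classes, input_shape=(224, 224, 3), weights="imagenet"):
    # Random transformations; active only during training (e.g. model.fit)
    augmentation = tf.keras.Sequential([
        tf.keras.layers.RandomFlip("horizontal"),
        tf.keras.layers.RandomRotation(0.1),  # up to +/-10% of a full turn (36 degrees)
        tf.keras.layers.RandomZoom(0.1),
    ], name="augmentation")

    conv_base = tf.keras.applications.VGG16(
        weights=weights, include_top=False, input_shape=input_shape
    )
    conv_base.trainable = False  # still feature extraction: the base stays frozen

    # Inputs are assumed to be already passed through vgg16.preprocess_input;
    # flips, rotations and zooms are geometric, so they can run after it
    inputs = tf.keras.Input(shape=input_shape)
    x = augmentation(inputs)
    x = conv_base(x, training=False)
    x = tf.keras.layers.Flatten()(x)
    x = tf.keras.layers.Dense(256, activation="relu")(x)
    outputs = tf.keras.layers.Dense(num_classes, activation="softmax")(x)

    model = tf.keras.Model(inputs, outputs)
    model.compile(
        optimizer="adam",
        loss="sparse_categorical_crossentropy",
        metrics=["accuracy"],
    )
    return model
```

The frozen base now runs on every augmented batch, so training is slower than training on cached features. In exchange, the model usually overfits less on small datasets.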