Deep Learning - RNN - Recurrent Neural Network - Backpropagation in RNN Tutorial

Consider a many-to-one RNN, for example sentiment analysis, trained on the following reviews:

| Review Input | Sentiment |
|---|---|
| movie was good | 1 |
| movie was bad | 0 |
| movie not good | 0 |

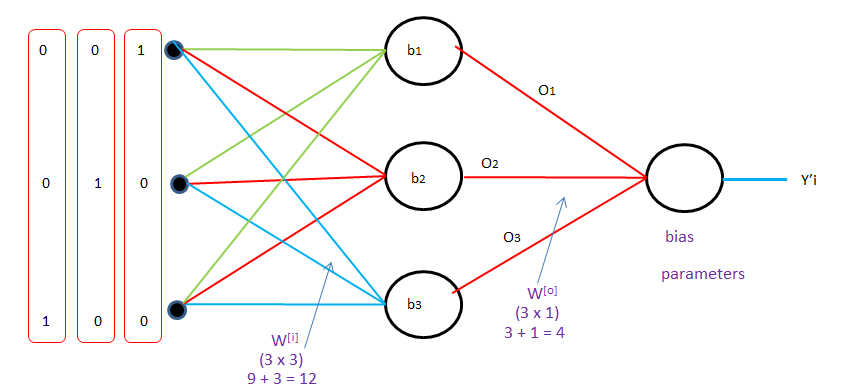

Number of unique words: 5, i.e. 'movie', 'was', 'good', 'bad', and 'not'. Each word is represented by a one-hot vector:

| movie | was | good | bad | not |
|---|---|---|---|---|
| [1, 0, 0, 0, 0] | [0, 1, 0, 0, 0] | [0, 0, 1, 0, 0] | [0, 0, 0, 1, 0] | [0, 0, 0, 0, 1] |

Converting each review into a sequence of vectors:

| Review Input | Sentiment |
|---|---|
| [1, 0, 0, 0, 0] [0, 1, 0, 0, 0] [0, 0, 1, 0, 0] | 1 |
| [1, 0, 0, 0, 0] [0, 1, 0, 0, 0] [0, 0, 0, 1, 0] | 0 |
| [1, 0, 0, 0, 0] [0, 0, 0, 0, 1] [0, 0, 1, 0, 0] | 0 |
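The encoding in the tables above can be sketched in a few lines of plain Python. The helper names `one_hot` and `encode_review` are made up for this illustration, and the vocabulary order is simply the one used in the table.

```python
# Vocabulary in the same order as the one-hot table.
vocab = ["movie", "was", "good", "bad", "not"]
word_to_index = {word: i for i, word in enumerate(vocab)}


def one_hot(word):
    """Return a list with a single 1 at the word's vocabulary index."""
    vec = [0] * len(vocab)
    vec[word_to_index[word]] = 1
    return vec


def encode_review(review):
    """Turn a whitespace-separated review into a list of one-hot vectors."""
    return [one_hot(word) for word in review.split()]


reviews = ["movie was good", "movie was bad", "movie not good"]
labels = [1, 0, 0]
encoded = [encode_review(r) for r in reviews]

for vectors, label in zip(encoded, labels):
    print(vectors, label)
```

Each review becomes a sequence of three vectors, one per time step of the RNN.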

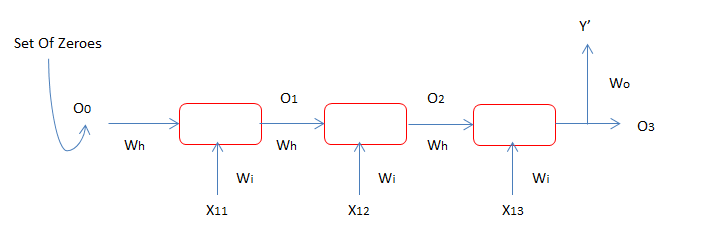

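For the first review, with $X_{1t}$ the vector of its $t$-th word, $f$ the hidden activation function, and $O_0$ the initial hidden state, the forward pass is:

$$O_1 = f(X_{11} W_i + O_0 W_h)$$

$$O_2 = f(X_{12} W_i + O_1 W_h)$$

$$O_3 = f(X_{13} W_i + O_2 W_h)$$

$$Y' = \sigma(O_3 W_o)$$

The loss is the binary cross-entropy:

$$L = -Y_i \log Y'_i - (1 - Y_i) \log(1 - Y'_i)$$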
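A minimal sketch of this forward pass and loss in plain Python. It assumes a hidden size of 1 (so `Wh` and `Wo` are scalars and `Wi` is a list of 5 weights), `tanh` as the activation `f`, and an initial state `O0 = 0`. None of these choices is fixed by the text above, and the weight values are arbitrary.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def forward(xs, Wi, Wh, Wo):
    """Run the many-to-one RNN over one review.

    xs: list of one-hot vectors, one per time step.
    Returns the hidden outputs [O1, ..., On] and the prediction Y'.
    """
    o = 0.0  # O0, assumed to be zero
    outputs = []
    for x in xs:
        a = sum(xk * wk for xk, wk in zip(x, Wi)) + o * Wh
        o = math.tanh(a)  # f is assumed to be tanh
        outputs.append(o)
    y_hat = sigmoid(outputs[-1] * Wo)  # only the last output feeds the prediction
    return outputs, y_hat


def bce_loss(y, y_hat):
    """Binary cross-entropy for a single example."""
    return -y * math.log(y_hat) - (1 - y) * math.log(1 - y_hat)


review = [[1, 0, 0, 0, 0], [0, 1, 0, 0, 0], [0, 0, 1, 0, 0]]  # "movie was good"
Wi = [0.1, -0.2, 0.3, -0.4, 0.5]  # arbitrary illustrative weights
Wh, Wo = 0.5, 1.0

outputs, y_hat = forward(review, Wi, Wh, Wo)
loss = bce_loss(1, y_hat)
print(outputs, y_hat, loss)
```

The same three weights are reused at every time step, which is why their gradients later collect one term per step.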

After calculating the loss, we minimize it using gradient descent.

To do that, we need to find the values of $W_i$, $W_h$, and $W_o$ for which $L$ is minimized. Each weight is updated repeatedly with learning rate $\eta$:

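$$W_i = W_i - \eta \frac{\partial L}{\partial W_i}$$

$$W_h = W_h - \eta \frac{\partial L}{\partial W_h}$$

$$W_o = W_o - \eta \frac{\partial L}{\partial W_o}$$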
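To see the update rule in isolation, here is a small sketch on a toy one-parameter loss, $L(w) = (w - 3)^2$. The toy loss, the function name `gradient_descent_step`, and the learning rate are assumptions made for illustration and are not part of the RNN itself.

```python
def gradient_descent_step(w, grad, eta):
    """Apply the rule W = W - eta * dL/dW to a single scalar weight."""
    return w - eta * grad


# Toy loss L(w) = (w - 3)^2, whose gradient is dL/dw = 2 * (w - 3).
w = 0.0
eta = 0.1
for _ in range(50):
    grad = 2.0 * (w - 3.0)
    w = gradient_descent_step(w, grad, eta)

print(w)  # moves toward the minimizer w = 3
```

For the RNN, the same step is applied to each of the three weights, using the gradients derived next.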

$$\frac{\partial L}{\partial W_o} = \frac{\partial L}{\partial Y'} \frac{\partial Y'}{\partial W_o}$$
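As a worked step, the two factors can be evaluated directly from the sigmoid output and the binary cross-entropy loss defined earlier, writing $Y$ for the true label and treating the quantities as scalars for readability:

$$\frac{\partial L}{\partial Y'} = -\frac{Y}{Y'} + \frac{1 - Y}{1 - Y'}, \qquad \frac{\partial Y'}{\partial W_o} = Y'(1 - Y')\, O_3$$

$$\frac{\partial L}{\partial W_o} = \left(-\frac{Y}{Y'} + \frac{1 - Y}{1 - Y'}\right) Y'(1 - Y')\, O_3 = (Y' - Y)\, O_3$$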

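$$\frac{\partial L}{\partial W_i} = \frac{\partial L}{\partial Y'} \frac{\partial Y'}{\partial O_3} \frac{\partial O_3}{\partial W_i} + \frac{\partial L}{\partial Y'} \frac{\partial Y'}{\partial O_3} \frac{\partial O_3}{\partial O_2} \frac{\partial O_2}{\partial W_i} + \frac{\partial L}{\partial Y'} \frac{\partial Y'}{\partial O_3} \frac{\partial O_3}{\partial O_2} \frac{\partial O_2}{\partial O_1} \frac{\partial O_1}{\partial W_i}$$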

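Summarizing the above for 3 time steps:

$$\frac{\partial L}{\partial W_i} = \sum_{j=1}^{3} \frac{\partial L}{\partial Y'} \frac{\partial Y'}{\partial O_j} \frac{\partial O_j}{\partial W_i}$$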

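For $j = 1$, the term is

$$\frac{\partial L}{\partial Y'} \frac{\partial Y'}{\partial O_1} \frac{\partial O_1}{\partial W_i} = \frac{\partial L}{\partial Y'} \frac{\partial Y'}{\partial O_3} \frac{\partial O_3}{\partial O_2} \frac{\partial O_2}{\partial O_1} \frac{\partial O_1}{\partial W_i}$$

For $j = 2$, the term is

$$\frac{\partial L}{\partial Y'} \frac{\partial Y'}{\partial O_2} \frac{\partial O_2}{\partial W_i} = \frac{\partial L}{\partial Y'} \frac{\partial Y'}{\partial O_3} \frac{\partial O_3}{\partial O_2} \frac{\partial O_2}{\partial W_i}$$

For $j = 3$, the term is

$$\frac{\partial L}{\partial Y'} \frac{\partial Y'}{\partial O_3} \frac{\partial O_3}{\partial W_i}$$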
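The individual factors in these chains can also be written out. Treating all quantities as scalars for readability, and writing $a_j = X_{1j} W_i + O_{j-1} W_h$ for the pre-activation at step $j$ (a name introduced here for convenience), so that $O_j = f(a_j)$:

$$\frac{\partial O_j}{\partial O_{j-1}} = f'(a_j)\, W_h, \qquad \frac{\partial O_j}{\partial W_i} = f'(a_j)\, X_{1j}, \qquad \frac{\partial O_j}{\partial W_h} = f'(a_j)\, O_{j-1}$$

The last two are direct derivatives that hold $O_{j-1}$ fixed. The dependence of $O_j$ on the weights through earlier time steps is carried by the chained factors $\frac{\partial O_3}{\partial O_2}$, $\frac{\partial O_2}{\partial O_1}$, and so on.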

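In general, for $n$ time steps:

$$\frac{\partial L}{\partial W_i} = \sum_{j=1}^{n} \frac{\partial L}{\partial Y'} \frac{\partial Y'}{\partial O_j} \frac{\partial O_j}{\partial W_i}$$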

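$$\frac{\partial L}{\partial W_h} = \frac{\partial L}{\partial Y'} \frac{\partial Y'}{\partial O_3} \frac{\partial O_3}{\partial W_h} + \frac{\partial L}{\partial Y'} \frac{\partial Y'}{\partial O_3} \frac{\partial O_3}{\partial O_2} \frac{\partial O_2}{\partial W_h} + \frac{\partial L}{\partial Y'} \frac{\partial Y'}{\partial O_3} \frac{\partial O_3}{\partial O_2} \frac{\partial O_2}{\partial O_1} \frac{\partial O_1}{\partial W_h}$$

Similarly, for $n$ time steps we get

$$\frac{\partial L}{\partial W_h} = \sum_{j=1}^{n} \frac{\partial L}{\partial Y'} \frac{\partial Y'}{\partial O_j} \frac{\partial O_j}{\partial W_h}$$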
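The sums above can be checked numerically. The following is a minimal sketch of backpropagation through time in plain Python, compared against finite-difference gradients. It assumes a hidden size of 1, `tanh` as the activation `f`, an initial state `O0 = 0`, and arbitrary weight values. The function names are illustrative and do not come from any library.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def forward(xs, Wi, Wh, Wo):
    """Return hidden outputs [O0, O1, ..., On] and the prediction Y'."""
    outs = [0.0]  # O0, assumed to be zero
    for x in xs:
        a = sum(xk * wk for xk, wk in zip(x, Wi)) + outs[-1] * Wh
        outs.append(math.tanh(a))  # f is assumed to be tanh
    return outs, sigmoid(outs[-1] * Wo)


def loss_fn(xs, y, Wi, Wh, Wo):
    _, y_hat = forward(xs, Wi, Wh, Wo)
    return -y * math.log(y_hat) - (1 - y) * math.log(1 - y_hat)


def bptt(xs, y, Wi, Wh, Wo):
    """Accumulate one gradient term per time step j, walking from j = n down to 1."""
    outs, y_hat = forward(xs, Wi, Wh, Wo)
    n = len(xs)
    dz = y_hat - y            # dL/dY' times the sigmoid derivative
    dWo = dz * outs[n]
    dWi = [0.0] * len(Wi)
    dWh = 0.0
    dO = dz * Wo              # dL/dO_n
    for j in range(n, 0, -1):
        da = dO * (1.0 - outs[j] ** 2)  # back through tanh at step j
        for k, xk in enumerate(xs[j - 1]):
            dWi[k] += da * xk           # term j of the sum for Wi
        dWh += da * outs[j - 1]         # term j of the sum for Wh
        dO = da * Wh                    # dL/dO_{j-1}
    return dWi, dWh, dWo


def numeric_grad(f, w, eps=1e-6):
    """Central finite-difference approximation of df/dw."""
    return (f(w + eps) - f(w - eps)) / (2 * eps)


xs = [[1, 0, 0, 0, 0], [0, 1, 0, 0, 0], [0, 0, 1, 0, 0]]  # "movie was good"
y = 1
Wi = [0.1, -0.2, 0.3, -0.4, 0.5]
Wh, Wo = 0.5, 1.0

dWi, dWh, dWo = bptt(xs, y, Wi, Wh, Wo)
num_dWh = numeric_grad(lambda w: loss_fn(xs, y, Wi, w, Wo), Wh)
num_dWo = numeric_grad(lambda w: loss_fn(xs, y, Wi, Wh, w), Wo)
num_dWi0 = numeric_grad(lambda w: loss_fn(xs, y, [w] + Wi[1:], Wh, Wo), Wi[0])

print(dWh, num_dWh)
print(dWo, num_dWo)
print(dWi[0], num_dWi0)
```

The analytic and numerical values should agree closely. The entries of `dWi` for 'bad' and 'not' are zero for this review because those words never appear in it.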