Deep Learning - CNN - Convolutional Neural Network - Backpropagation in CNN Tutorial

What is Backpropagation?

Backpropagation is an algorithm for training neural networks. It fine-tunes the weights of a network based on the error (loss) measured in the previous training iteration.

Backpropagation, short for "backward propagation of errors," is an algorithm for supervised learning of artificial neural networks using gradient descent. Given an artificial neural network and an error function, the method calculates the gradient of the error function with respect to the neural network's weights using the chain rule.

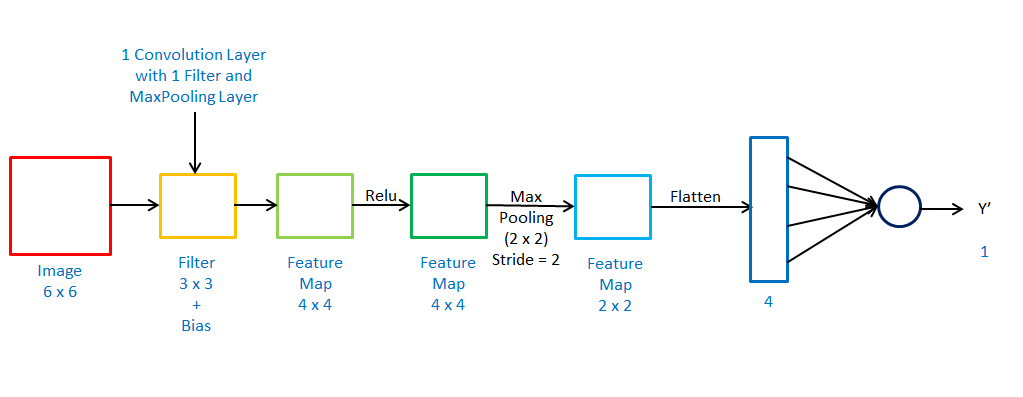

Total Trainable Parameters-

W1 has shape (3,3) and W2 has shape (1,4)

b1 has shape (1,1) and b2 has shape (1,1)

which gives 9 + 4 + 1 + 1 = 15 trainable parameters
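The count can be checked with a few lines of Python. This is only a sketch: the dictionary `shapes` is a name chosen here for illustration, and it simply restates the shapes listed above.

```python
from math import prod

# Parameter shapes as listed above.
shapes = {"W1": (3, 3), "b1": (1, 1), "W2": (1, 4), "b2": (1, 1)}

# Each parameter contributes the product of its dimensions.
counts = {name: prod(shape) for name, shape in shapes.items()}
total = sum(counts.values())
print(counts, total)
```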

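The loss for binary classification (binary cross-entropy) is

L = -Yi log(Y'i) - (1 - Yi) log(1 - Y'i)

where Yi is the true label (0 or 1) and Y'i is the predicted probability.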
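As a rough illustration, the loss for a single example could be written as below. The function name `binary_cross_entropy` and the clipping constant `eps` are assumptions made for this sketch; the clipping only guards against taking log(0) and is not part of the formula above.

```python
import math

def binary_cross_entropy(y, y_pred, eps=1e-12):
    """Loss for one example: y is the 0/1 label, y_pred the predicted probability."""
    # Clip the prediction away from 0 and 1 to avoid log(0) (illustrative choice).
    p = min(max(y_pred, eps), 1.0 - eps)
    return -y * math.log(p) - (1 - y) * math.log(1 - p)

# A confident correct prediction costs less than a confident wrong one.
good = binary_cross_entropy(1, 0.9)
bad = binary_cross_entropy(1, 0.1)
print(good, bad)
```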

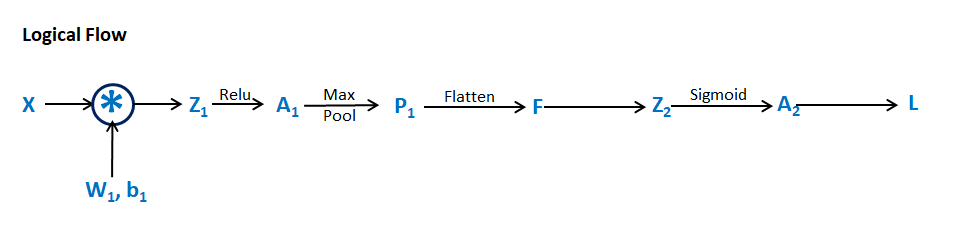

Forward Propagation-

Z1 = Conv(X, W1) + b1

A1 = Relu(Z1)

P1 = MaxPool(A1)

F = Flatten(P1)

Z2 = W2 F + b2

A2 = σ(Z2)
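The forward pass can be sketched in plain Python as follows. Several details are assumptions, since the text does not state them: a 6x6 single-channel input, a stride-1 convolution without padding (computed as cross-correlation, as most deep learning libraries do), 2x2 max pooling with stride 2, and a scalar bias. With those choices the flattened vector F has 4 entries, which matches the (1,4) shape of W2. All function names are hypothetical.

```python
import math

def conv2d_valid(x, w, b):
    """Stride-1 cross-correlation without padding, plus a scalar bias."""
    k = len(w)
    n = len(x) - k + 1
    return [[sum(x[i + u][j + v] * w[u][v] for u in range(k) for v in range(k)) + b
             for j in range(n)] for i in range(n)]

def relu(z):
    return [[max(0.0, v) for v in row] for row in z]

def maxpool2x2(a):
    """2x2 max pooling with stride 2."""
    return [[max(a[i][j], a[i][j + 1], a[i + 1][j], a[i + 1][j + 1])
             for j in range(0, len(a[0]), 2)] for i in range(0, len(a), 2)]

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def forward(x, w1, b1, w2, b2):
    z1 = conv2d_valid(x, w1, b1)          # Z1 = Conv(X, W1) + b1
    a1 = relu(z1)                         # A1 = Relu(Z1)
    p1 = maxpool2x2(a1)                   # P1 = MaxPool(A1)
    f = [v for row in p1 for v in row]    # F = Flatten(P1)
    z2 = sum(wk * fk for wk, fk in zip(w2, f)) + b2  # dot product of W2 and F, plus b2
    a2 = sigmoid(z2)                      # A2 = sigmoid(Z2)
    return z1, a1, p1, f, z2, a2

# Toy inputs (made up for illustration).
x = [[1.0] * 6 for _ in range(6)]
w1 = [[0.1] * 3 for _ in range(3)]
w2 = [0.5, 0.5, 0.5, 0.5]
z1, a1, p1, f, z2, a2 = forward(x, w1, 0.0, w2, 0.0)
print(len(z1), len(p1), len(f), a2)
```

The intermediate values are returned because the backward pass needs them: Z1 for the ReLU derivative, A1 for locating the pooled maxima, and F for the gradient of W2.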

Backward Propagation-

We apply gradient descent to all trainable parameters until the loss is minimized:

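$$W_1 = W_1 - \eta \frac{\partial L}{\partial W_1}$$

$$b_1 = b_1 - \eta \frac{\partial L}{\partial b_1}$$

$$W_2 = W_2 - \eta \frac{\partial L}{\partial W_2}$$

$$b_2 = b_2 - \eta \frac{\partial L}{\partial b_2}$$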
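One update step might look like the sketch below. The helper `sgd_step`, the flat-list storage of each parameter, and the numbers are all illustrative assumptions; the gradients are taken as given here, since computing them is the subject of the derivation that follows.

```python
def sgd_step(params, grads, lr):
    """Return new parameters after one gradient descent step: p - lr * dL/dp."""
    return {name: [p - lr * g for p, g in zip(params[name], grads[name])]
            for name in params}

# Made-up parameters and gradients, each stored as a flat list.
params = {"W2": [1.0, 2.0], "b2": [0.5]}
grads = {"W2": [0.1, -0.2], "b2": [1.0]}
updated = sgd_step(params, grads, lr=0.1)
print(updated)
```

A positive gradient lowers the parameter and a negative gradient raises it, so each step moves against the slope of the loss.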

Finding the derivative-

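$$\frac{\partial L}{\partial W_2} = \frac{\partial L}{\partial A_2} \times \frac{\partial A_2}{\partial Z_2} \times \frac{\partial Z_2}{\partial W_2}$$

$$\frac{\partial L}{\partial b_2} = \frac{\partial L}{\partial A_2} \times \frac{\partial A_2}{\partial Z_2} \times \frac{\partial Z_2}{\partial b_2}$$

$$\frac{\partial L}{\partial W_1} = \frac{\partial L}{\partial A_2} \times \frac{\partial A_2}{\partial Z_2} \times \frac{\partial Z_2}{\partial F} \times \frac{\partial F}{\partial P_1} \times \frac{\partial P_1}{\partial A_1} \times \frac{\partial A_1}{\partial Z_1} \times \frac{\partial Z_1}{\partial W_1}$$

$$\frac{\partial L}{\partial b_1} = \frac{\partial L}{\partial A_2} \times \frac{\partial A_2}{\partial Z_2} \times \frac{\partial Z_2}{\partial F} \times \frac{\partial F}{\partial P_1} \times \frac{\partial P_1}{\partial A_1} \times \frac{\partial A_1}{\partial Z_1} \times \frac{\partial Z_1}{\partial b_1}$$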

Let a2 denote the entry of the matrix A2 that corresponds to a single image:

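$$\frac{\partial L}{\partial a_2} = \frac{\partial}{\partial a_2}\left[-Y_i \log(a_2) - (1 - Y_i)\log(1 - a_2)\right]$$

$$= -\frac{Y_i}{a_2} + \frac{1 - Y_i}{1 - a_2} = \frac{-Y_i(1 - a_2) + a_2(1 - Y_i)}{a_2(1 - a_2)} = \frac{-Y_i + Y_i a_2 + a_2 - a_2 Y_i}{a_2(1 - a_2)}$$

$$= \frac{a_2 - Y_i}{a_2(1 - a_2)}$$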

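$$\frac{\partial A_2}{\partial Z_2} = \sigma(Z_2)\left[1 - \sigma(Z_2)\right] = a_2(1 - a_2)$$

$$\frac{\partial Z_2}{\partial W_2} = F$$

$$\frac{\partial Z_2}{\partial b_2} = 1$$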

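$$\frac{\partial L}{\partial W_2} = \frac{a_2 - Y_i}{a_2(1 - a_2)} \times a_2(1 - a_2) \times F = (a_2 - Y_i)F^T = (A_2 - Y)F^T$$

$$\frac{\partial L}{\partial b_2} = \frac{a_2 - Y_i}{a_2(1 - a_2)} \times a_2(1 - a_2) \times 1 = (a_2 - Y_i) = (A_2 - Y)$$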
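The simplified results for W2 and b2 can be sanity-checked against a finite-difference approximation. The sketch below is not part of the original derivation: the function names, the step size `h`, and the toy values for F, W2, b2, and the label are assumptions chosen only to exercise the formulas.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def loss(w2, b2, f, y):
    """Binary cross-entropy of the dense + sigmoid output for one example."""
    a2 = sigmoid(sum(wk * fk for wk, fk in zip(w2, f)) + b2)
    return -y * math.log(a2) - (1 - y) * math.log(1 - a2)

def dense_grads(w2, b2, f, y):
    """Analytic gradients: dL/dW2 = (a2 - y) * F and dL/db2 = (a2 - y)."""
    a2 = sigmoid(sum(wk * fk for wk, fk in zip(w2, f)) + b2)
    dz2 = a2 - y
    return [dz2 * fk for fk in f], dz2

# Toy values (made up for illustration).
f = [0.2, 0.4, 0.9, 0.1]
w2 = [0.3, -0.1, 0.5, 0.2]
b2, y, h = 0.05, 1, 1e-6

dw2, db2 = dense_grads(w2, b2, f, y)

# Central finite differences for comparison.
num_db2 = (loss(w2, b2 + h, f, y) - loss(w2, b2 - h, f, y)) / (2 * h)
num_dw2 = []
for k in range(len(w2)):
    up = w2[:k] + [w2[k] + h] + w2[k + 1:]
    down = w2[:k] + [w2[k] - h] + w2[k + 1:]
    num_dw2.append((loss(up, b2, f, y) - loss(down, b2, f, y)) / (2 * h))

print(dw2, num_dw2)
print(db2, num_db2)
```

The agreement between the two estimates reflects the cancellation in the derivation: the a2(1 - a2) factor from the sigmoid cancels the denominator of the loss derivative, leaving only (a2 - Yi).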

$$\frac{\partial Z_2}{\partial F} = W_2$$

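Flattening has no parameters, so the step from F back to P1 only reshapes the gradient with respect to F into the shape of P1: reshape(P1.shape)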

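$$\frac{\partial L}{\partial A_{1,mn}} = \begin{cases} \dfrac{\partial L}{\partial P_{1,xy}}, & \text{if } A_{1,mn} \text{ is the max element} \\ 0, & \text{otherwise} \end{cases}$$

$$\frac{\partial A_{1,xy}}{\partial Z_{1,xy}} = \begin{cases} 1, & \text{if } Z_{1,xy} > 0 \\ 0, & \text{if } Z_{1,xy} < 0 \end{cases}$$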
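The last three steps (back through the dense layer to F, reshaping to P1, then routing through max pooling and ReLU) could be sketched as below. The 2x2 pooling window with stride 2, the tie-breaking rule (the first maximum in a window receives the gradient), and treating the ReLU derivative as 0 at exactly Z1 = 0 are assumptions made for this sketch. All function names are hypothetical.

```python
def dense_backward_to_pool(dz2, w2, rows=2, cols=2):
    """dL/dF = dz2 * W2, reshaped to the shape of P1."""
    df = [dz2 * wk for wk in w2]
    return [df[r * cols:(r + 1) * cols] for r in range(rows)]

def maxpool2x2_backward(a1, dp1):
    """Send each pooled gradient to the position of the max in its 2x2 window."""
    da1 = [[0.0] * len(a1[0]) for _ in a1]
    for x in range(len(dp1)):
        for y in range(len(dp1[0])):
            window = [(a1[2 * x + u][2 * y + v], 2 * x + u, 2 * y + v)
                      for u in range(2) for v in range(2)]
            _, m, n = max(window, key=lambda t: t[0])  # first max wins on ties
            da1[m][n] = dp1[x][y]
    return da1

def relu_backward(z1, da1):
    """Pass the gradient where Z1 > 0 and block it elsewhere."""
    return [[g if z > 0 else 0.0 for z, g in zip(zr, gr)]
            for zr, gr in zip(z1, da1)]

# Toy values (made up for illustration).
z1 = [[1.0, -2.0, 3.0, 0.0],
      [0.0, 5.0, -1.0, 2.0],
      [-3.0, -4.0, 1.0, 1.0],
      [-1.0, -2.0, 0.0, 7.0]]
a1 = [[max(0.0, v) for v in row] for row in z1]
dp1 = [[10.0, 20.0], [30.0, 40.0]]

da1 = maxpool2x2_backward(a1, dp1)
dz1 = relu_backward(z1, da1)
print(da1)
print(dz1)
```

In this example the bottom-left window of A1 is all zeros, so pooling still routes its gradient to one position, but ReLU then blocks it because the matching Z1 entry is negative.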